Generative AI for Everyone: Out of the Box Solutions

This article introduces two out-of-the-box solutions for using Generative AI with your own data, neither of which requires coding skills. The first is based on Microsoft Azure and OpenAI and requires an Azure account. The second is PrivateGPT, an open-source GitHub project that runs on-premises. Finally, we compare our test results for these two approaches and for LangChain, which we described in our previous blog post.

Showcase: Building a Digital Marketing Assistant

Our Goal

Our goal is to build a Digital Marketing Assistant powered by a Question Answering System. This system is tailored to understand queries in natural language and provide answers in the same user-friendly manner. The knowledge foundation for our assistant is derived from 33 insightful articles on digital marketing available on the Cusaas Blog: Customer Segmentation as a Service.

To ensure seamless integration with our tools, we began by converting each article to plain text (".txt"). Although many tools can handle HTML and PDF directly, converting to ".txt" sidesteps potential problems with encoding or line breaks.
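The article does not say which converter was used. As an illustration only, here is a minimal sketch of an HTML-to-TXT step using nothing but Python's standard-library `html.parser`. The function names `html_to_text` and `convert_folder` and the source/destination folder layout are hypothetical.

```python
from html.parser import HTMLParser
from pathlib import Path


class TextExtractor(HTMLParser):
    """Collects visible text fragments, skipping <script> and <style> contents."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is not inside <script>/<style>.
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML string, one text fragment per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


def convert_folder(src: Path, dst: Path) -> None:
    """Write a UTF-8 .txt file for every .html file in src (hypothetical layout)."""
    dst.mkdir(parents=True, exist_ok=True)
    for page in src.glob("*.html"):
        text = html_to_text(page.read_text(encoding="utf-8"))
        (dst / f"{page.stem}.txt").write_text(text, encoding="utf-8")
```

Writing every file as UTF-8 is what removes the encoding ambiguity. One caveat of this simple approach: inline tags such as `<b>` also produce line breaks, which a production converter would handle differently from block elements.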

Microsoft Azure OpenAI (Cloud Solution)

First, we built the digital marketing assistant in the cloud using Microsoft technology.

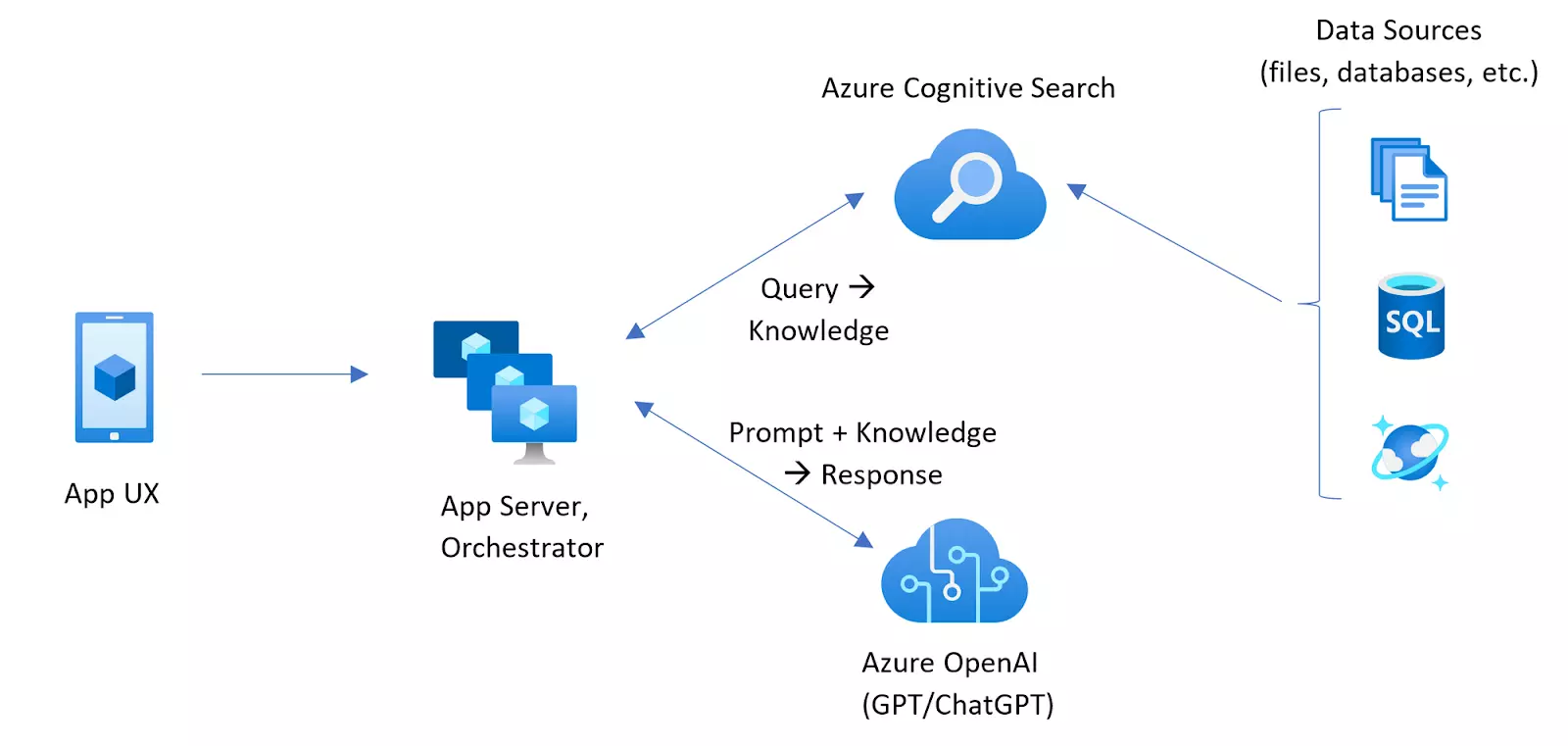

ChatGPT using MS Azure OpenAI and Azure Cognitive Search[1]

Using Azure Cognitive Search and Azure OpenAI Service, we can create a ChatGPT-like chatbot that works with our own data on Microsoft's Azure cloud. Cognitive Search quickly retrieves the relevant passages from a large document collection, and ChatGPT turns them into a natural-language answer.

A simple way to make ChatGPT answer questions about our data is to include the relevant information in the prompt. ChatGPT can then answer without being retrained or fine-tuned, and it picks up new information as soon as our data changes.
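To make "include the relevant information in the prompt" concrete, here is a minimal sketch that packs retrieved passages into a chat request. The `role`/`content` message structure follows the usual Chat Completions convention. The instruction wording, the `[docN]` labels, and the function name `build_messages` are our own illustrative assumptions, not the format Azure uses internally.

```python
def build_messages(question: str, passages: list[str]) -> list[dict[str, str]]:
    """Assemble a chat-style request that grounds the model in the supplied passages."""
    # Number each passage so the model can cite it (label format is an assumption).
    sources = "\n\n".join(
        f"[doc{i}] {text}" for i, text in enumerate(passages, start=1)
    )
    system = (
        "You are a digital marketing assistant. Answer only from the sources below "
        "and cite them as [docN]. If the answer is not in the sources, say so.\n\n"
        f"Sources:\n{sources}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

Because the knowledge travels with each request, updating the documents changes the answers immediately, with no retraining step.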

However, there is a limit to how much text ChatGPT can process at once. For example, the original GPT-3.5 Turbo model accepts at most 4,096 tokens per request, shared between the prompt and the answer. It is also impractical to send large amounts of data with every question. A better solution is to keep all our data in a separate, well-organised index from which only the relevant passages are retrieved when needed. This is where Cognitive Search comes in handy.
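The idea of retrieving only what fits can be sketched as follows. Cognitive Search ranks documents with its own full-text and semantic search. This illustration swaps in naive word-overlap scoring and a rough "about 4 characters per token" estimate for English text. Both are simplifying assumptions, and `select_passages` is a hypothetical helper.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def estimate_tokens(text: str) -> int:
    """Rough token estimate: ~4 characters per token for English (rule of thumb)."""
    return max(1, len(text) // 4)


def select_passages(question: str, passages: list[str], budget: int) -> list[str]:
    """Keep the best-matching passages whose combined estimated size fits the budget."""
    query = set(tokenize(question))
    scored = [(len(query & set(tokenize(p))), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]  # drop passages with no shared words
    scored.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep input order
    selected, used = [], 0
    for _, passage in scored:
        cost = estimate_tokens(passage)
        if used + cost > budget:
            continue  # skip passages that would overflow the context window
        selected.append(passage)
        used += cost
    return selected
```

In practice, the budget is the model's context window minus the tokens reserved for instructions, the question, and the answer.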

Tests

We performed the following steps for our tests:

- Created an OpenAI chatbot using:

  - Azure Cognitive Search Service

  - Azure OpenAI Service

  - Azure Storage Account

  - Azure Resource Group

- Selected the GPT-3.5 Turbo model as the LLM for our chatbot.

- Converted 33 articles from the Cusaas Blog into TXT format.

- Uploaded our articles into Azure Cognitive Search and enabled semantic search.

- Entered questions about our articles.

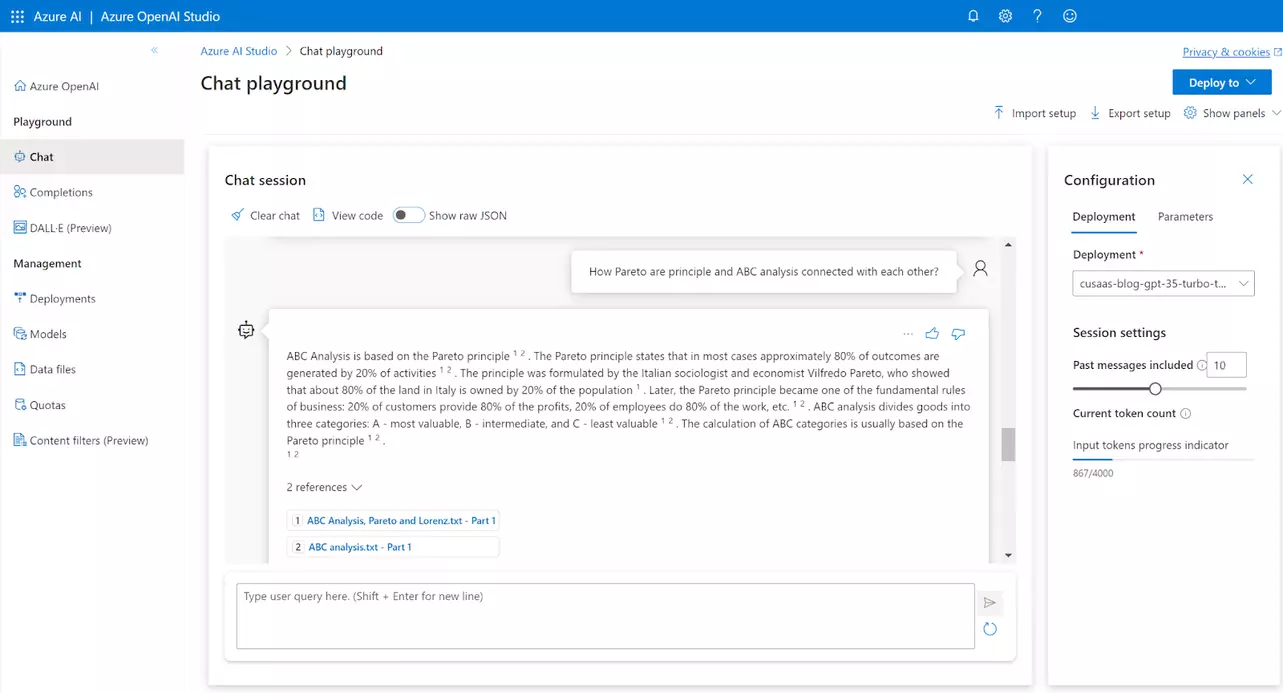

OpenAI Chatbot in Microsoft Azure Open AI Studio looks like this:

The final solution was deployed as:

- an Azure Web App

- a Microsoft Power Virtual Agents chatbot

The test results are compared in the tables at the end of this article.

Conclusion

GPT-3.5 Turbo delivered accurate and fast answers in our tests, and we expect GPT-4 to perform even better. Let's summarise the key takeaways:

Pros:

- Document ingestion is straightforward and swift.

- Direct import is available for common file formats: .txt, .md, .html, MS Word, MS PowerPoint, and PDF.

- GPT-3.5 Turbo consistently delivers precise answers.

- Responses are typically immediate, minimising delays.

- The system is low-maintenance and reliable.

- Being part of the Microsoft Azure Stack, it seamlessly integrates with other MS Azure services.

- The solution runs entirely in the cloud.

- It generates source references for each statement.

Cons:

- The cloud-based nature requires entrusting Microsoft with private data. However, Microsoft assures that data will not be exposed to the public internet or be used to train LLMs further.

- Cost considerations are crucial. Large-scale projects with vast document quantities might find the per-token pricing model expensive.

- Model variety is currently limited, with only GPT, DALL-E, Embeddings, and Whisper models on offer.
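To gauge the per-token cost concern before a large rollout, a back-of-the-envelope estimate is enough. This is a minimal sketch. The prices are parameters you must fill in from the current Azure OpenAI price list, and the numbers in the usage example are purely hypothetical. Retrieved context counts as prompt tokens, so each question costs more than its own length suggests.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one request under per-1,000-token pricing (prices are inputs)."""
    return (prompt_tokens / 1000) * price_in_per_1k + (completion_tokens / 1000) * price_out_per_1k


def monthly_cost(queries_per_day: int, prompt_tokens: int, completion_tokens: int,
                 price_in_per_1k: float, price_out_per_1k: float, days: int = 30) -> float:
    """Scale the per-request cost to a month of traffic."""
    per_query = estimate_cost(prompt_tokens, completion_tokens,
                              price_in_per_1k, price_out_per_1k)
    return queries_per_day * days * per_query


# Hypothetical example: 3,000 prompt tokens (question plus retrieved passages),
# 500 answer tokens, an assumed price of 0.002 per 1K tokens, 1,000 questions a day.
print(round(monthly_cost(1000, 3000, 500, 0.002, 0.002), 2))  # 210.0
```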

PrivateGPT (On-Premises Solution)

Here, the focus is on an on-premises solution for a digital marketing assistant, tailored for enterprises handling sensitive internal data.

PrivateGPT is an open-source GitHub project for asking questions about your own documents with a large language model while keeping everything private. Its aim is to explore whether question answering with LLMs and vector embeddings can be done completely privately. With PrivateGPT, you can query documents without an internet connection, and because the data never leaves the execution environment, it stays private. PrivateGPT builds on several technologies, including LangChain, GPT4All, LlamaCpp, Chroma, and SentenceTransformers.

The project can be accessed at: GitHub - imartinez/privateGPT: Interact with your documents using the power of GPT, 100% privately, no data leaks

Accepted file types for knowledge ingestion include: .csv, .docx, .doc, .enex, .eml, .epub, .html, .md, .msg, .odt, .pdf, .pptx, .ppt, .txt.
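Before ingestion, it can help to check which files in a source folder PrivateGPT will actually pick up. The following is a minimal pre-flight sketch that is not part of PrivateGPT itself. The extension set is copied from the list above, and the helper names `is_supported` and `partition_sources` are hypothetical.

```python
from pathlib import Path

# Extensions taken from the list of accepted file types above.
SUPPORTED_EXTENSIONS = {
    ".csv", ".docx", ".doc", ".enex", ".eml", ".epub", ".html",
    ".md", ".msg", ".odt", ".pdf", ".pptx", ".ppt", ".txt",
}


def is_supported(path: Path) -> bool:
    """True if the file extension (case-insensitive) is in the accepted list."""
    return path.suffix.lower() in SUPPORTED_EXTENSIONS


def partition_sources(folder: Path) -> tuple[list[Path], list[Path]]:
    """Split all files under folder into (ingestible, skipped) lists."""
    ingestible, skipped = [], []
    for path in sorted(p for p in folder.rglob("*") if p.is_file()):
        (ingestible if is_supported(path) else skipped).append(path)
    return ingestible, skipped
```

Listing the skipped files makes it obvious when, for example, an `.xlsx` export silently never reaches the knowledge base.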

Operating principle:

- PrivateGPT uses LangChain tools to parse documents and create embeddings locally with HuggingFaceEmbeddings (SentenceTransformers). The embeddings are then stored in a local vector database via the Chroma vector store.

- Next, a local LLM based on GPT4All-J or LlamaCpp (both used through LangChain wrappers) interprets the question and generates the answer. The context for the answer is retrieved from the local vector store with a similarity search, which locates the relevant passages in the documents.[2]
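The chunk, embed, store, and search pipeline described above can be illustrated in a few lines of pure Python. This is a toy sketch, not PrivateGPT's code. A bag-of-words count vector stands in for the dense SentenceTransformers embeddings, an in-memory list stands in for Chroma, and the chunk size and overlap values are illustrative assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (stand-in for SentenceTransformers)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def split_into_chunks(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Fixed-size character chunks that overlap, so sentences at a boundary are not lost."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


class InMemoryVectorStore:
    """Minimal stand-in for a vector database such as Chroma."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, chunks: list[str]) -> None:
        self.items.extend((embed(c), c) for c in chunks)

    def similarity_search(self, query: str, k: int = 4) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]
```

The chunks returned by `similarity_search` are what gets placed in the local LLM's prompt as context. Real embeddings also match synonyms and paraphrases, which word counts cannot.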

Tests

The tests were conducted using the following hardware configuration: 11th Gen Intel® Core™ i7-11370H @ 3.30GHz with 16 GB RAM.

The testing process was as follows:

- The PrivateGPT project was downloaded from GitHub.

- Three open-source LLMs in GGML format were downloaded for testing.

- The settings of PrivateGPT were modified in the .env file.

- 33 articles from the Cusaas Blog were converted into TXT format.

- These documents were ingested into PrivateGPT using the command line.

- PrivateGPT was initiated from the command line, and queries regarding the ingested documents were posed.
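Switching models comes down to editing a few `KEY=VALUE` lines in `.env`. The sketch below parses and rewrites such a file with the standard library. The key names are assumptions modelled on the `example.env` shipped with the project at the time, and so are the `LlamaCpp` model type and the `.bin` file path for the Vicuna model. Check them against your own checkout, because later releases changed the configuration format.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, ignoring blank lines, comments, and surrounding quotes."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


def render_env(settings: dict[str, str]) -> str:
    """Serialise settings back to .env text."""
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


# Assumed default settings (verify key names against your PrivateGPT version).
DEFAULT_ENV = """\
PERSIST_DIRECTORY=db
MODEL_TYPE=GPT4All
MODEL_PATH=models/ggml-gpt4all-j-v1.3-groovy.bin
EMBEDDINGS_MODEL_NAME=all-MiniLM-L6-v2
MODEL_N_CTX=1000
"""

# Point PrivateGPT at the Vicuna 13B model instead of the default (assumed values).
settings = parse_env(DEFAULT_ENV)
settings.update(MODEL_TYPE="LlamaCpp", MODEL_PATH="models/ggml-vic13b-q5_1.bin")
print(render_env(settings))
```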

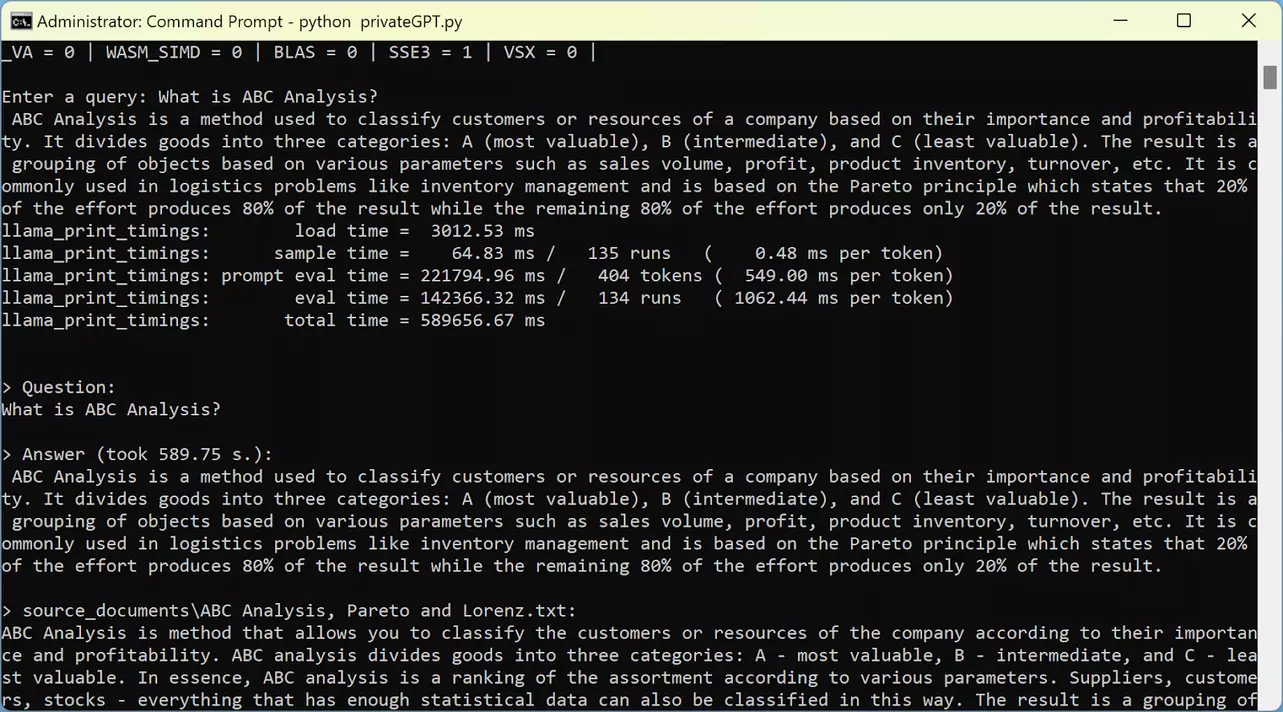

The following screenshots shows the PrivateGPT command line user interface:

The models we evaluated include:

- GPT4All (ggml-gpt4all-j-v1.3-groovy): This is the default model for PrivateGPT. Its performance is superior to Koala 7B but lags behind Vicuna 13B. The quality of its responses is moderate.

- Vicuna 13B (ggml-vic13b-q5_1): Among the three models tested, this one delivered the highest quality in generative responses. However, its processing speed is slower compared to the others.

- Koala 7B: This model yielded the least satisfactory results, producing answers of relatively low quality. While we wouldn't recommend it based on quality, its redeeming feature is its faster processing speed compared to the other models.

Our assessment showed that the Vicuna 13B (ggml-vic13b-q5_1) model provided the most accurate answers to our queries. Selected answers are compared in the tables at the end of this article.

Conclusion

In its current state, PrivateGPT is best suited for experimentation and is not yet ready for production. It is a work in progress and may have occasional bugs, but its prospects look promising. If you need a more robust, commercially supported option, you may want to explore the similarly named PrivateGPT product offered by Private AI:

PrivateGPT: The Privacy Layer for ChatGPT by Private Ai

Pros:

- Uploading documents is straightforward and swift.

- It supports a broad range of document types.

- When equipped with the right model (like Vicuna 13B), it delivers highly accurate responses.

- It prioritises user privacy, as it runs on-premises (locally on the server) without an internet connection.

- It is open-source and free of charge.

- It is flexible and can work with other open-source models in a supported format.

Cons:

- Generating answers can be slow. With the Vicuna 13B model, for instance, responses typically took 3–5 minutes.

- Lack of GPU acceleration support.

- Stability can sometimes be an issue, leading to occasional errors or non-responses.

- As it's an open-source project, it doesn't come with dedicated external support.

Results comparison: MS OpenAI, PrivateGPT, LangChain

The tables below compare the answers that the three approaches gave to the same three questions:

| Model | What is ABC Analysis? |
|---|---|
| MS OpenAI GPT-3.5 Turbo 154B | ABC Analysis is a method that allows you to classify the customers or resources of the company according to their importance and profitability. ABC analysis divides goods into three categories: A - most valuable, B - intermediate, and C - least valuable. The calculation of ABC categories is usually based on the Pareto principle, which states that in most cases approximately 80% of outcomes are generated by 20% of activities. ABC analysis is also based on the principle of imbalance, and when it is performed, a graph of the dependence of the total effect on the number of elements considered is plotted. It is called the Lorenz curve. ABC analysis is typically used to track the shipment volume of certain goods and the frequency of call-offs, as well as to classify customers by the number or volume of orders they place. |
| PrivateGPT Vicuna 13B | The Pareto principle is a key underlying concept behind ABC analysis. In fact, the name "ABC" itself comes from the first letters of the three categories that are created through the analysis: A (the most important), B (less important), and C (least important). These categories are determined based on the value or impact each item has on the overall system, which is often measured in terms of its contribution to revenue or profit. The idea behind ABC analysis is that by focusing on the most important items (the A items), a company can improve efficiency and reduce costs while still maintaining a high level of performance. In this way, ABC analysis can be seen as a practical application of the Pareto principle in business management. |
| LangChain LLaMA 2 13B Q6K | The Pareto principle is the foundation for ABC analysis, as it states that 20% of resources (e.g. products, customers, employees) generate 80% of results. This principle is used to categorize resources into ABC categories based on their importance and profitability. |

| Model | How Pareto principle and ABC analysis are connected to each other? |
|---|---|
| MS OpenAI GPT-3.5 Turbo 154B | ABC Analysis is based on the Pareto principle. The Pareto principle states that in most cases approximately 80% of outcomes are generated by 20% of activities. The principle was formulated by the Italian sociologist and economist Vilfredo Pareto, who showed that about 80% of the land in Italy is owned by 20% of the population. Later, the Pareto principle became one of the fundamental rules of business: 20% of customers provide 80% of the profits, 20% of employees do 80% of the work, etc.. ABC analysis divides goods into three categories: A - most valuable, B - intermediate, and C - least valuable. The calculation of ABC categories is usually based on the Pareto principle. |
| PrivateGPT Vicuna 13B | The Pareto principle is a key underlying concept behind ABC analysis. In fact, the name "ABC" itself comes from the first letters of the three categories that are created through the analysis: A (the most important), B (less important), and C (least important). These categories are determined based on the value or impact each item has on the overall system, which is often measured in terms of its contribution to revenue or profit. The idea behind ABC analysis is that by focusing on the most important items (the A items), a company can improve efficiency and reduce costs while still maintaining a high level of performance. In this way, ABC analysis can be seen as a practical application of the Pareto principle in business management. |
| LangChain LLaMA 2 13B Q6K | The Pareto principle is the foundation for ABC analysis, as it states that 20% of resources (e.g. products, customers, employees) generate 80% of results. This principle is used to categorize resources into ABC categories based on their importance and profitability. |

| Model | What do actionable metrics of cohort analysis help to identify? |
|---|---|
| MS OpenAI GPT-3.5 Turbo 154B | Actionable metrics of cohort analysis help to identify the real situation, make decisions, and improve the business. They are metrics that link repeatable actions to observable outcomes, such as user registration followed by a purchase. By analyzing these metrics across cohorts, companies can better tailor their business to specific customer groups. In the context of customer analytics, actionable metrics of cohort analysis can help identify patterns in customer behavior at different stages of the customer lifecycle. This allows companies to make more informed decisions about how to optimize performance, such as increasing sales or reducing customer churn. |
| PrivateGPT Vicuna 13B | Actionable metrics from cohort analysis can help to identify patterns in customer behavior specific to a particular cohort or group of customers, which can inform better business decisions and targeted marketing strategies for that specific group. |
| LangChain LLaMA 2 13B Q6K | Actionable metrics of cohort analysis help to identify trends, patterns, and insights in customer behavior. These metrics can include: |

The most compelling answers came from GPT-3.5 Turbo and LLaMA 2 13B Q6K. LLaMA 2's answers tend to be more concise and precise. Answer length can be controlled through prompt engineering by stating the desired length in natural language, for example as a number of sentences or words.
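As a small illustration of this kind of prompt engineering, the sketch below appends a length instruction to a question and then checks whether the answer respected it. Models do not always follow such instructions exactly, which is why the check is useful. The instruction wording, the helper names, and the punctuation-based sentence splitting are our own simplifying assumptions; abbreviations such as "e.g." will be miscounted.

```python
import re


def with_length_limit(question: str, max_sentences: int) -> str:
    """Append a natural-language length constraint to the user's question."""
    return f"{question}\nAnswer in at most {max_sentences} sentences."


def count_sentences(answer: str) -> int:
    """Rough count: split on ., ! or ? followed by whitespace or end of text."""
    return len([s for s in re.split(r"[.!?]+(?:\s+|$)", answer.strip()) if s])


def within_limit(answer: str, max_sentences: int) -> bool:
    """True if the answer respects the requested maximum length."""
    return count_sentences(answer) <= max_sentences
```

For example, `with_length_limit("What is ABC analysis?", 2)` produces the question followed by the line "Answer in at most 2 sentences.", and `within_limit` can then flag answers that ran long.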

Note that GPT-3.5 Turbo is a proprietary, resource-intensive LLM, whereas Meta's LLaMA 2 is openly available, free to use under Meta's community license, and comparatively lightweight. For perspective, GPT-3.5 Turbo is reported to have around 154 billion parameters (OpenAI has not officially published the figure), compared with 13 billion for the LLaMA 2 model we used. The LLaMA 2 70B version, with 70 billion parameters, can yield even better results, though it requires significantly more RAM.
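How much more RAM is easy to estimate: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch below assumes llama.cpp's Q6_K quantisation at about 6.5625 bits per weight and uses the nominal 13B and 70B parameter counts. The context cache and runtime overhead come on top, so treat the results as lower bounds.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of quantised weights in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Approximate bits per weight for llama.cpp's Q6_K quantisation.
Q6_K_BITS = 6.5625

for name, params in [("LLaMA 2 13B", 13e9), ("LLaMA 2 70B", 70e9)]:
    print(f"{name}: ~{weights_size_gb(params, Q6_K_BITS):.1f} GB for weights alone")
# LLaMA 2 13B: ~10.7 GB for weights alone
# LLaMA 2 70B: ~57.4 GB for weights alone
```

By this estimate, the 13B model fits on a 16 GB machine like the one used for our PrivateGPT tests, while the 70B model at the same quantisation does not.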


References

- [1] Revolutionize your Enterprise Data with ChatGPT: Next-gen Apps w/ Azure OpenAI and Cognitive Search

- [2] PrivateGPT: A Promising Project for Protecting Privacy and Ensuring Data Confidentiality

- GitHub - imartinez/privateGPT: Interact with your documents using the power of GPT, 100% privately, no data leaks